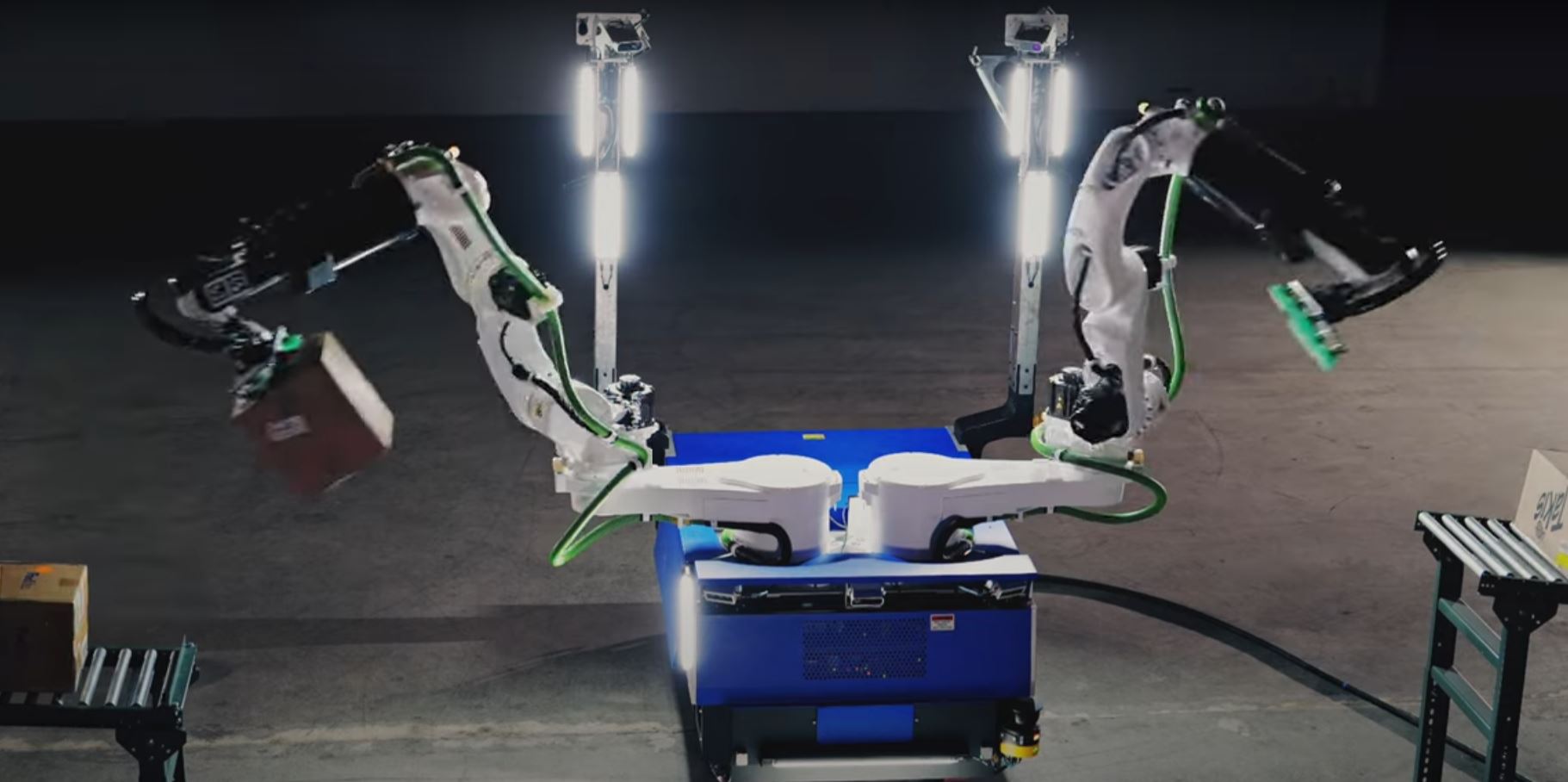

Dexterity Mech Dual-Arm Industrial Superhumanoid Robot

This is the Dexterity Mech: an AI-powered robot that can operate in complex, unstructured environments. It can handle and move items much as a human would. Mech has two arms, which it uses for palletizing and truck loading, and its vertical reach extends beyond 8 feet.

The world’s first industrial superhumanoid robot?

Dexterity has released Mech – a superhumanoid, powered by Physical AI, to operate in complex, unstructured environments across industrial sites.

Unlike traditional robots, Mech moves with the skill of a human but has the… pic.twitter.com/FUeuiMGhuN

— Circuit (@circuitrobotics) March 20, 2025

Mech’s arms have a 5.4 m arm span and can lift a 60 kg load. Mech also has an AI-powered touch/pressure sensing system. The above video shows it in action.

[HT]