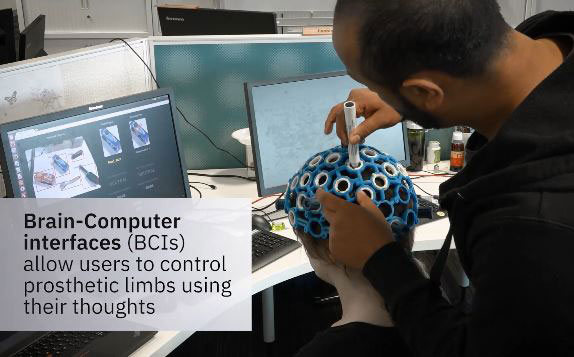

Plenty of researchers are working on building more advanced prosthetic devices. IBM researchers have used AI to explore the idea of controlling prosthetic limbs through thought. They used the OpenBCI headset to read the intentions of the test subjects. As they explain:

> Once intended activities were decoded, we translated them into instructions for a robotic arm capable of grasping and positioning objects in a real life environment. We linked the robotic arm to a camera and a custom developed deep learning framework, which we call GraspNet. GraspNet can determine the best positions for the robotic gripper to pick up objects of interest.

More information about this cool research is available here.