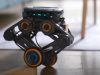

Researchers keep looking for ways to make it easier to teach robots new commands. Anh Nguyen, Dimitrios Kanoulas, Luca Muratore, Darwin G. Caldwell, and Nikos G. Tsagarakis have presented a paper that explores translating videos into commands with deep recurrent neural networks (RNNs). The framework first extracts visual features from the video, then passes them to an encoder-decoder architecture built from two RNN layers: the encoder summarizes the sequence of visual features, and the decoder generates the corresponding command.
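The encoder-decoder idea can be illustrated with a small, untrained sketch in plain Python. This is not the authors' implementation, and several things here are assumptions: per-frame feature vectors are taken as already extracted (the paper's actual feature extractor is not reproduced), plain tanh (Elman) RNN cells stand in for whatever recurrent units the paper uses, the decoder starts from the encoder's final hidden state, decoding is greedy, and the vocabulary and the names `RNNCell`, `VideoToCommand`, and `generate` are hypothetical.

```python
import math
import random


def matvec(W, x):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def rand_matrix(rows, cols, rng, scale=0.1):
    # Small random weights; a real model would learn these from data.
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class RNNCell:
    """Hypothetical Elman cell: h_new = tanh(W_x x + W_h h + b)."""

    def __init__(self, input_size, hidden_size, rng):
        self.W_x = rand_matrix(hidden_size, input_size, rng)
        self.W_h = rand_matrix(hidden_size, hidden_size, rng)
        self.b = [0.0] * hidden_size

    def step(self, x, h):
        a = matvec(self.W_x, x)
        c = matvec(self.W_h, h)
        return [math.tanh(ai + ci + bi) for ai, ci, bi in zip(a, c, self.b)]


class VideoToCommand:
    """Hypothetical two-RNN encoder-decoder: frame features in, command words out."""

    def __init__(self, feat_dim, hidden, vocab, seed=0):
        rng = random.Random(seed)
        self.vocab = vocab
        self.hidden = hidden
        self.encoder = RNNCell(feat_dim, hidden, rng)    # RNN 1: reads visual features
        self.decoder = RNNCell(len(vocab), hidden, rng)  # RNN 2: emits words
        self.W_out = rand_matrix(len(vocab), hidden, rng)  # hidden state -> word scores

    def encode(self, frame_features):
        # Run the encoder over the frames; keep only the final hidden state.
        h = [0.0] * self.hidden
        for x in frame_features:
            h = self.encoder.step(x, h)
        return h

    def one_hot(self, idx):
        v = [0.0] * len(self.vocab)
        v[idx] = 1.0
        return v

    def generate(self, frame_features, max_len=10):
        # Assumption: the decoder is initialized with the encoder's final state.
        h = self.encode(frame_features)
        word = self.vocab.index("<bos>")
        out = []
        for _ in range(max_len):
            # Feed the previous word back in and pick the highest-scoring next word.
            h = self.decoder.step(self.one_hot(word), h)
            logits = matvec(self.W_out, h)
            word = max(range(len(logits)), key=logits.__getitem__)
            if self.vocab[word] == "<eos>":
                break
            out.append(self.vocab[word])
        return out


# Usage: 5 frames of made-up 8-dimensional features.
vocab = ["<bos>", "<eos>", "righthand", "grasp", "pour", "cup", "bottle"]
rng = random.Random(1)
video = [[rng.uniform(-1, 1) for _ in range(8)] for _ in range(5)]
model = VideoToCommand(feat_dim=8, hidden=16, vocab=vocab)
print(model.generate(video))  # untrained weights, so the words are arbitrary
```

Because the weights are random, the printed command is meaningless; training on paired videos and commands is what would make the decoder produce sensible instructions. The split into one RNN that only reads and another that only writes is what lets the input video and the output command have different lengths.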


Translating Videos to Commands for Robotic Manipulation with Deep Recurrent Neural Networks

[Paper]
